What Assumptions Are Required for the Two Sample T-test Presented Here to Be Appropriate?

- Research article

- Open Access

To test or not to test: Preliminary assessment of normality when comparing two independent samples

BMC Medical Research Methodology volume 12, Article number: 81 (2012)

Abstract

Background

Student's two-sample t test is generally used for comparing the means of two independent samples, for example, two treatment arms. Under the null hypothesis, the t test assumes that the two samples arise from the same normally distributed population with unknown variance. Adequate control of the Type I error requires that the normality assumption holds, which is often examined by means of a preliminary Shapiro-Wilk test. The following two-stage procedure is widely accepted: If the preliminary test for normality is not significant, the t test is used; if the preliminary test rejects the null hypothesis of normality, a nonparametric test is applied in the main analysis.

Methods

Equally sized samples were drawn from exponential, uniform, and normal distributions. The two-sample t test was conducted if either both samples (Strategy I) or the collapsed set of residuals from both samples (Strategy II) had passed the preliminary Shapiro-Wilk test for normality; otherwise, Mann-Whitney's U test was conducted. By simulation, we separately estimated the conditional Type I error probabilities for the parametric and nonparametric part of the two-stage procedure. Finally, we assessed the overall Type I error rate and the power of the two-stage procedure as a whole.

Results

Preliminary testing for normality seriously altered the conditional Type I error rates of the subsequent main analysis for both parametric and nonparametric tests. We discuss possible explanations for the observed results, the most important one being the selection mechanism due to the preliminary test. Interestingly, the overall Type I error rate and power of the entire two-stage procedure remained within acceptable limits.

Conclusion

The two-stage procedure might be considered incorrect from a formal perspective; nevertheless, in the investigated examples, this procedure seemed to satisfactorily maintain the nominal significance level and had acceptable power properties.

Background

Statistical tests have become more and more important in medical research [1–3], but many publications have been reported to contain serious statistical errors [4–10]. In this regard, violation of distributional assumptions has been identified as one of the most common problems: According to Olsen [9], a frequent error is to use statistical tests that assume a normal distribution on data that are actually skewed. With small samples, Neville et al. [10] considered the use of parametric tests erroneous unless a test for normality had been conducted before. Similarly, Strasak et al. [7] criticized that contributors to medical journals often failed to examine and report that assumptions had been met when conducting Student's t test.

Probably one of the most popular research questions is whether two independent samples differ from each other. Altman, for example, stated that "most clinical trials yield data of this type, as do observational studies comparing different groups of subjects" ([11], p. 191). In Student's t test, the expectations of two populations are compared. The test assumes independent sampling from normal distributions with equal variance. If these assumptions are met and the null hypothesis of equal population means holds true, the test statistic T follows a t distribution with n_X + n_Y – 2 degrees of freedom:

$$T = \frac{m_X - m_Y}{s\,\sqrt{\dfrac{1}{n_X} + \dfrac{1}{n_Y}}} \tag{1}$$

where m_X and m_Y are the observed sample means, n_X and n_Y are the sample sizes of the two groups, and s is an estimate of the common standard deviation. If the assumptions are violated, T is compared with the wrong reference distribution, which may result in a deviation of the actual Type I error from the nominal significance level [12, 13], in a loss of power relative to other tests developed for similar problems [14], or both. In medical research, normally distributed data are the exception rather than the rule [15, 16]. In such situations, the use of parametric methods is discouraged, and nonparametric tests (which are also referred to as distribution-free tests) such as the two-sample Mann–Whitney U test are recommended instead [11, 17].
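The statistic described above can be computed in a few lines. The following is a minimal sketch, assuming that s is the usual pooled standard deviation (the text only calls it "an estimate of the common standard deviation"); the function name `pooled_t_statistic` is hypothetical and chosen for illustration.

```python
import math
from statistics import mean, variance


def pooled_t_statistic(x, y):
    """Return (T, degrees of freedom) for Student's two-sample t test.

    Assumption: s is the pooled standard deviation, i.e. the square root
    of the df-weighted average of the two sample variances.
    """
    n_x, n_y = len(x), len(y)
    m_x, m_y = mean(x), mean(y)
    df = n_x + n_y - 2
    # Pooled variance from the two unbiased sample variances.
    s2 = ((n_x - 1) * variance(x) + (n_y - 1) * variance(y)) / df
    s = math.sqrt(s2)
    # T = (m_X - m_Y) / (s * sqrt(1/n_X + 1/n_Y))
    t = (m_x - m_y) / (s * math.sqrt(1.0 / n_x + 1.0 / n_y))
    return t, df


t_value, df = pooled_t_statistic([1, 2, 3, 4, 5], [2, 3, 4, 5, 6])
```

The resulting T would then be compared with a t distribution with `df` degrees of freedom, which is only the correct reference distribution if the assumptions listed above hold.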

Guidelines for contributions to medical journals emphasize the importance of distributional assumptions [18, 19]. Sometimes, special recommendations are provided. When addressing the question of how to compare changes from baseline in randomized clinical trials if data do not follow a normal distribution, Vickers, for example, concluded that such data are best analyzed with analysis of covariance [20]. In clinical trials, a detailed description of the statistical analysis is mandatory [21]. This description requires good knowledge about the clinical endpoints, which is often limited. Researchers, therefore, tend to specify alternative statistical procedures in case the underlying assumptions are not satisfied (e.g., [22]). For the t test, Livingston [23] presented a list of conditions that must be considered (e.g., normal distribution, equal variances, etc.). Consequently, some researchers routinely check if their data fulfill the assumptions and change the analysis method if they do not (for a review, see [24]).

In a preliminary test, a specific assumption is checked; the outcome of the pretest then determines which method should be used for assessing the main hypothesis [25–28]. For the paired t test, Freidlin et al. ([29], p. 887) described "a natural adaptive procedure (…) to first apply the Shapiro-Wilk test to the differences: if normality is accepted, the t test is used; otherwise the Wilcoxon signed ranked test is used." Similar two-stage procedures including a preliminary test for normality are common for two-sample t tests [30, 31]. Therefore, conventional statistical practice for comparing continuous outcomes from two independent samples is to use a pretest for normality (H0: "The true distribution is normal" against H1: "The true distribution is non-normal") at significance level α_pre before testing the main hypothesis. If the pretest is not significant, the statistic T is used to test the main hypothesis of equal population means at significance level α. If the pretest is significant, Mann-Whitney's U test may be applied to compare the two groups. Such a two-stage procedure (Additional file 1) appears logical, and goodness-of-fit tests for normality are frequently reported in articles [32–35].

Some authors have recently warned against preliminary testing [24, 36–45]. First of all, theoretical drawbacks exist with regard to the preliminary testing of assumptions. The basic difficulty of a typical pretest is that the desired result is often the acceptance of the null hypothesis. In practice, the conclusion about the validity of, for example, the normality assumption is then implicit rather than explicit: Because insufficient evidence exists to reject normality, normality will be considered true. In this context, Schucany and Ng [41] speak about a "logical problem". Further critiques of preliminary testing focused on the fact that assumptions refer to characteristics of populations and not to characteristics of samples. In particular, small to moderate sample sizes do not guarantee matching of the sample distribution with the population distribution. For example, Altman ([11], Figure 4.7, p. 60) showed that even samples of size 50 taken from a normal distribution may look non-normal. Second, some preliminary tests are accompanied by their own underlying assumptions, raising the question of whether these assumptions also need to be examined. In addition, even if the preliminary test indicates that the tested assumption does not hold, the actual test of interest may still be robust to violations of this assumption. Finally, preliminary tests are usually applied to the same data as the subsequent test, which may result in uncontrolled error rates. For the one-sample t test, Schucany and Ng [41] conducted a simulation study of the consequences of the two-stage selection procedure including a preliminary test for normality. Data were sampled from normal, uniform, exponential, and Cauchy populations. The authors estimated the Type I error rate of the one-sample t test, given that the sample had passed the Shapiro-Wilk test for normality with a p value greater than α_pre. For exponentially distributed data, the conditional Type I error rate of the main test turned out to be strikingly above the nominal significance level and even increased with sample size. For two-sample tests, Zimmerman [42–45] addressed the question of how the Type I error and power are modified if a researcher's choice of test (i.e., t test for equal versus unequal variances) is based on sample statistics of variance homogeneity. Zimmerman concluded that choosing the pooled or separate variance version of the t test solely on the inspection of the sample data neither maintains the significance level nor protects the power of the procedure. Rasch et al. [39] assessed the statistical properties of a three-stage procedure including testing for normality and for homogeneity of the variances. The authors concluded that assumptions underlying the two-sample t test should not be pre-tested because "pre-testing leads to unknown final Type I and Type II risks if the respective statistical tests are performed using the same set of observations". Interestingly, none of the studies cited above explicitly addressed the unconditional error rates of the two-stage procedure as a whole. The studies rather focused on the conditional error rates, that is, the Type I and Type II error of single arms of the two-stage procedure.

In the present study, we investigated the statistical properties of Student's t test and Mann-Whitney's U test for comparing two independent groups with different selection procedures. Similar to Schucany and Ng [41], the tests to be applied were chosen depending on the results of the preliminary Shapiro-Wilk tests for normality of the two samples involved. We thereby obtained an estimate of the conditional Type I error rates for samples that were classified as normal although the underlying populations were in fact non-normal, and vice versa. This probability reflects the error rate researchers may face with respect to the main hypothesis if they mistakenly believe the normality assumption to be satisfied or violated. If, in addition, the power of the preliminary Shapiro-Wilk test is taken into account, the potential impact of the entire two-stage procedure on the overall Type I error rate and power can be directly estimated.

Methods

In our simulation study, equally sized samples for two groups were drawn from three different distributions, covering a variety of shapes of data encountered in clinical research. Two selection strategies were examined for the main test to be applied. In Strategy I, the two-sample t test was conducted if both samples had passed the preliminary Shapiro-Wilk test for normality; otherwise, we applied Mann-Whitney's U test. In Strategy II, the t test was conducted if the residuals from both samples had passed the pretest; otherwise, we used the U test. The difference between the two strategies is that, in Strategy I, the Shapiro-Wilk test for normality is separately conducted on raw data from each sample, whereas in Strategy II, the preliminary test is applied only once, i.e., to the collapsed set of residuals from both samples.
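The selection rule shared by both strategies can be sketched as a small function. This is an illustration only, not the authors' R code: the name `choose_main_test` is hypothetical, and the pretest p values are assumed to come from a Shapiro-Wilk implementation that is not shown here.

```python
def choose_main_test(pretest_p_values, alpha_pre):
    """Pick the main test from the Shapiro-Wilk pretest p value(s).

    Strategy I supplies two p values (one per raw sample); Strategy II
    supplies a single p value (collapsed residuals of both samples).
    The t test is chosen only if no pretest is significant at alpha_pre.
    Passing alpha_pre=None models the "no pretest" condition.
    """
    if alpha_pre is None:
        return "t"
    passed = all(p > alpha_pre for p in pretest_p_values)
    return "t" if passed else "U"


# Strategy I: one of the two samples fails the pretest, so U is used.
strategy_1_choice = choose_main_test([0.20, 0.03], alpha_pre=0.05)
# Strategy II: a single pretest on the residuals, which is passed here.
strategy_2_choice = choose_main_test([0.12], alpha_pre=0.05)
```

Writing the rule this way makes the only structural difference between the strategies visible: the number of pretests that must be passed before the t test is applied.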

The statistical language R 2.14.0 [46] was used for the simulations. Random sample pairs of size n_X = n_Y = 10, 20, 30, 40, 50 were generated from the following distributions: (1) exponential distribution with unit expectation and variance; (2) uniform distribution in [0, 1]; and (3) the standard normal distribution. This procedure was repeated until 10,000 pairs of samples had passed the preliminary screening for normality (either Strategy I or II, with α_pre = .100, .050, .010, .005, or no pretest). For these samples, the null hypothesis μ_X = μ_Y was tested against the alternative μ_X ≠ μ_Y using Student's t test at the two-sided significance level α = .05. The conditional Type I error rates (left arm of the decision tree in Additional file 1) were then estimated by the number of significant t tests divided by 10,000. The precision of the results thereby amounts to maximally ±1% (width of the 95% confidence interval for proportion 0.5). In a second run, sample generation was repeated until 10,000 pairs were collected that had failed preliminary screening for normality (Strategy I or II), and the conditional Type I error was estimated for Mann-Whitney's U test (right part of Additional file 1).
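The stated precision of about ±1% can be checked with a short calculation. The sketch below assumes the usual normal-approximation (Wald) interval for a proportion, which the text does not name explicitly; `ci_half_width` is a hypothetical helper.

```python
import math


def ci_half_width(p, n_sim, z=1.96):
    """Half-width of the normal-approximation 95% CI for a proportion.

    Assumption: Wald interval, z = 1.96 for a two-sided 95% level.
    """
    return z * math.sqrt(p * (1.0 - p) / n_sim)


# Worst case p = 0.5 with 10,000 simulated sample pairs.
print(round(ci_half_width(0.5, 10_000), 4))  # 0.0098
```

Because p(1 − p) is largest at p = 0.5, this is the maximum half-width; estimated error rates near 5% are more precise than that.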

Finally, 100,000 pairs of samples were generated from exponential, uniform, and normal distributions to assess the unconditional Type I error of the entire two-stage procedure. Depending on whether the preliminary Shapiro-Wilk test was significant or not, Mann-Whitney's U test or Student's t test was conducted for the main analysis. The Type I error rate of the entire two-stage procedure was estimated by the number of significant tests (t or U) divided by 100,000.

Results

Strategy I

The first strategy required both samples to pass the preliminary screening for normality to proceed with the two-sample t test; otherwise, we used Mann-Whitney's U test. This strategy was motivated by the well-known assumption that the two-sample t test requires data within each of the two groups to be sampled from normally distributed populations (e.g., [11]).

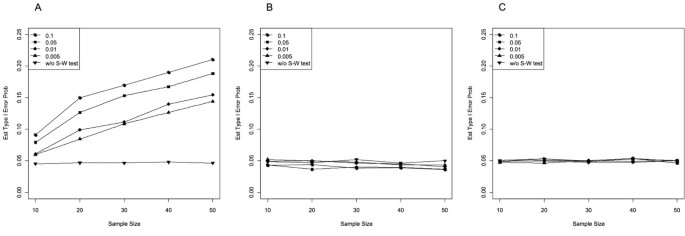

Table 1 (left) summarizes the estimated conditional Type I error probabilities of the standard two-sample t test (i.e., t test assuming equal variances) at the two-sided nominal level α = .05 after both samples had passed the Shapiro-Wilk test for normality, as well as the unconditional Type I error rate of the t test without a pretest for normality. Figure 1 additionally plots the corresponding estimates if the underlying distribution was either (A) exponential, (B) uniform, or (C) normal. As can be seen from Table 1 and Figure 1, the unconditional two-sample t test (i.e., without pretest) was α-robust, even if the underlying distribution was exponential or uniform. In contrast, the observed conditional Type I error rates differed from the nominal significance level. For the exponential distribution, the selective application of the two-sample t test to pairs of samples that had been accepted as normal led to Type I error rates of the final t test that were considerably larger than α = .05 (Figure 1A). Moreover, the violation of the significance level increased with sample size and α_pre. For example, for n = 30, the observed Type I error rates of the two-sample t test turned out to be 10.8% for α_pre = .005 and even 17.0% for α_pre = .100, whereas the unconditional Type I error rate was 4.7%. If the underlying distribution was uniform, the conditional Type I error rates declined below the nominal level, particularly as samples became larger and preliminary significance levels increased (Figure 1B). For normally distributed populations, conditional and unconditional Type I error rates roughly followed the nominal significance level (Figure 1C).

Estimated Type I error probability of the two-sample t test at α = .05 after both samples had passed the Shapiro-Wilk test for normality at α_pre = .100, .050, .010, .005 (conditional), and without pretest (unconditional). Samples of equal size from the (A) exponential, (B) uniform, and (C) normal distribution.

For pairs in which at least one sample had not passed the pretest for normality, we conducted Mann-Whitney's U test. The estimated conditional Type I error probabilities are summarized in Table 1 (right): For exponential samples, only a negligible tendency towards conservative decisions was observed, but samples from the uniform distribution, and, to a lesser extent, samples from the normal distribution proved problematic. In contrast to the pattern observed for the conditional t test, however, the nominal significance level was mostly violated in small samples and at numerically low significance levels of the pretest (e.g., α_pre = .005).

Strategy II

The two-sample t test is a special case of a linear model that assumes independent normally distributed errors. Therefore, the normality assumption can be examined through residuals instead of raw data. In linear models, residuals are defined as differences between observed and expected values. In the two-sample comparison, the expected value for a measurement corresponds to the mean of the sample from which it derived, so that the residual simplifies to the difference between the observed value and the sample mean. In regression modeling, the assumption of normality is often checked by the plotting of residuals after parameter estimation. However, this order may be reversed, and formal tests of normality based on residuals may be carried out. In Strategy II, one single Shapiro-Wilk test was applied to the collapsed set of residuals from both samples; thus, in contrast to Strategy I, only one pretest for normality had to be passed.
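The collapsed set of residuals described above is simple to construct: each observation is centered on the mean of its own sample, and the two centered samples are concatenated. A minimal sketch follows; the function name `pooled_residuals` is hypothetical, and the Shapiro-Wilk test that would then be applied to the result is not shown.

```python
from statistics import mean


def pooled_residuals(x, y):
    """Center each sample on its own mean and concatenate the results.

    The returned list has len(x) + len(y) entries and is the input to
    the single pretest used in Strategy II.
    """
    m_x, m_y = mean(x), mean(y)
    return [v - m_x for v in x] + [v - m_y for v in y]


residuals = pooled_residuals([1, 2, 3], [10, 20])
print(residuals)  # [-1, 0, 1, -5, 5]
```

Since the pretest sees both samples at once, it operates on twice as many values as each separate pretest in Strategy I.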

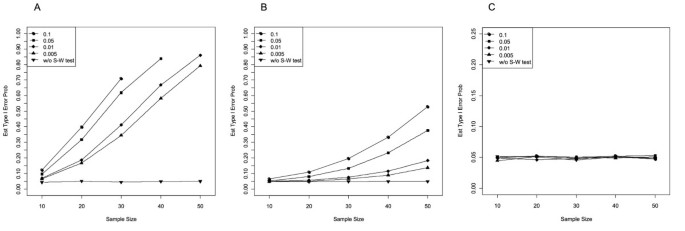

Table 2 (left) and Figure 2 show the estimated conditional Type I error probabilities of the two-sample t test at α = .05 (two-sided) after residuals had passed the Shapiro-Wilk test for the three different underlying distributions and for different α_pre levels as well as the corresponding unconditional Type I error rates (i.e., without pretest). For the normal distribution, the conditional and the unconditional Type I error rates were very close to the nominal significance level for all sample sizes and α_pre levels considered. Thus, if the underlying distribution was normal, the preliminary Shapiro-Wilk test for normality of the residuals did not affect the Type I error probability of the subsequent two-sample t test.

Estimated Type I error probability of the two-sample t test at α = .05 after the residuals had passed the Shapiro-Wilk test for normality at α_pre = .100, .050, .010, .005 (conditional), and without pretest (unconditional). Samples of equal size from the (A) exponential, (B) uniform, and (C) normal distribution.

For the two other distributions, the results were strikingly different. For samples from the exponential distribution, conditional Type I error rates were much larger than the nominal significance level (Figure 2A). For example, at α_pre = .005, conditional Type I error rates ranged from 6.4% for n = 10 up to 79.2% in samples of n = 50. For the largest preliminary α_pre level of .100, samples of n = 30 reached error rates above 70%. Thus, the discrepancy between the observed Type I error rate and the nominal α was even more pronounced than for Strategy I and increased again with growing preliminary α_pre and increasing sample size.

Surprisingly and in remarkable contrast to the results observed for Strategy I, samples from the uniform distribution that had passed screening for normality of residuals also led to conditional Type I error rates that were far above 5% (Figure 2B). The distortion of the Type I error rate was only slightly less extreme for the uniform than for the exponential distribution, resulting in error rates up to 50%. The conditional Type I error rate increased again with growing sample size and increasing preliminary significance level of the Shapiro-Wilk test. For example, at α_pre = .100, conditional Type I error rates were between 6.5% for n = 10 and even 52.9% for n = 50. Similarly, in samples of n = 50, the conditional Type I error rate was between 13.8% for α_pre = .005 and 52.9% for α_pre = .100, whereas the Type I error rate without pretest was close to 5.0%.

If the distribution of the residuals was judged as non-normal by the preliminary Shapiro-Wilk test, the two samples were compared by means of Mann-Whitney's U test (Table 2, right). As for Strategy I, the Type I error rate of the conditional U test was closest to the nominal α for samples from the exponential distribution. For samples from the uniform distribution, the U test did not fully exhaust the significance level but showed remarkably anti-conservative behavior for samples drawn from the normal distribution, which was most pronounced in small samples and at numerically low α_pre.

Entire two-stage procedure

Biased decisions within the two arms of the decision tree in Additional file 1 are mainly a matter of theoretical interest, whereas the unconditional Type I error and power of the two-stage procedure reflect how the algorithm works in practice. Therefore, we directly assessed the practical consequences of the entire two-stage procedure with respect to the overall, unconditional Type I error. This evaluation was additionally motivated by the anticipation that, although the observed conditional Type I error rates of both the main parametric test and the nonparametric test were seriously altered by screening for normality, these results will rarely occur in practice because the Shapiro-Wilk test is very powerful in large samples. Again, pairs of samples were generated from exponential, uniform, and normal distributions. Depending on whether the preliminary Shapiro-Wilk test was significant or not, Mann-Whitney's U test or Student's t test was conducted in the main analysis. Table 3 outlines the estimated unconditional Type I error rates. In line with this expectation, the results show that the two-stage procedure as a whole can be considered robust with respect to the unconditional Type I error rate. This holds true for all three distributions considered, irrespective of the strategy chosen for the preliminary test.

Because the two-stage procedure seemed to keep the nominal significance level, we additionally investigated the corresponding statistical power. To this end, 100,000 pairs of samples were drawn from unit variance normal distributions with means 0.0 and 0.6, from uniform distributions in [0.0, 1.0] and [0.2, 1.2], and from exponential distributions with rate parameters 1.0 and 2.0.

As Table 4 shows, statistical power to detect a shift in two normal distributions corresponds to the weighted sum of the power of the unconditional use of Student's t test and Mann-Whitney's U test. When both samples must pass the preliminary test for normality (Strategy I), the weights correspond to (1 – α_pre)² and 1 – (1 – α_pre)², respectively, which is consistent with the rejection rate of the Shapiro-Wilk test under the normality assumption. For Strategy II, the weights roughly correspond to 1 – α_pre and α_pre, respectively (a minimal deviation can be expected here because the residuals from the two samples are not completely independent). Similar results were observed for shifted uniform distributions and exponential distributions with different rate parameters: In both distributions, the overall power of the two-stage procedure seemed to lie in between the power estimated for the unconditional t test and the U test.
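The weights named above follow directly from the pretest level when the data are truly normal. The sketch below computes them and the resulting weighted power; the function names are hypothetical, the power values passed in the example are placeholders rather than results from Table 4, and for Strategy II the weights are only approximate, as noted in the text.

```python
def pretest_weights(alpha_pre, strategy):
    """Return (weight of t test, weight of U test) under normality.

    Strategy "I": both independent pretests must be passed, so the
    t test is used with probability (1 - alpha_pre) ** 2.
    Strategy "II": a single pretest, so roughly 1 - alpha_pre.
    """
    if strategy == "I":
        w_t = (1.0 - alpha_pre) ** 2
    elif strategy == "II":
        w_t = 1.0 - alpha_pre
    else:
        raise ValueError("strategy must be 'I' or 'II'")
    return w_t, 1.0 - w_t


def two_stage_power(power_t, power_u, alpha_pre, strategy):
    """Weighted sum of the unconditional powers of the t and U tests."""
    w_t, w_u = pretest_weights(alpha_pre, strategy)
    return w_t * power_t + w_u * power_u


# Placeholder powers (not taken from the paper), alpha_pre = .100.
example = two_stage_power(0.80, 0.78, alpha_pre=0.10, strategy="I")
```

With α_pre = .100, Strategy I sends about 19% of normal sample pairs to the U test, compared with about 10% for Strategy II, which is why the two strategies can differ slightly in overall power.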

Discussion

The appropriateness of a statistical test, which depends on underlying distributional assumptions, is generally not a problem if the population distribution is known in advance. If the assumption of normality is known to be wrong, a nonparametric test may be used that does not require normally distributed data. Difficulties arise if the population distribution is unknown, which, unfortunately, is the most common scenario in medical research. Many statistical textbooks and articles state that assumptions should be checked before conducting statistical tests, and that tests should be chosen depending on whether the assumptions are met (e.g., [22, 28, 47, 48]). Various options for testing assumptions are easily available and sometimes even automatically generated within the standard output of statistical software (e.g., see SAS or SPSS for the assumption of variance homogeneity for the t test; for a discussion see [42–45]). Similarly, methodological guidelines for clinical trials generally recommend checking for conditions underlying statistical methods. According to ICH E3, for example, when presenting the results of a statistical analysis, researchers should demonstrate that the data satisfied the crucial underlying assumptions of the statistical test used [49]. Although it is well known that decision-making after inspection of sample data can lead to altered Type I and Type II error probabilities and sometimes to spurious rejection of the null hypothesis, researchers are often confused about or unaware of the potential shortcomings of such two-stage procedures.

Conditional Type I error rates

We demonstrated the dramatic effects of preliminary testing for normality on the conditional Type I error rate of the main test (see Tables 1 and 2, and Figures 1 and 2). Most of these consequences were qualitatively similar for Strategy I (separate preliminary test for each sample) and Strategy II (preliminary test based on residuals), but quantitatively more pronounced for Strategy II than for Strategy I. On the one hand, the results replicated those found for the one-sample t test [41]. On the other hand, our study revealed interesting new findings: Preliminary testing not only affects the Type I error of the t test on samples from non-normal distributions but also the performance of Mann-Whitney's U test for equally sized samples from uniform and normal distributions. Since we focused on a two-stage procedure assuming homogeneous variances, it can be expected that an additional test for homogeneity of variances should lead to a further distortion of the conditional Type I error rates (e.g., [39, 42–45]).

Detailed discussion of potential reasons for the detrimental effects of preliminary tests is provided elsewhere [30, 41, 50]; therefore, only a global argument is given here: Exponentially distributed variables follow an exponential distribution, and uniformly distributed variables follow a uniform distribution. This trivial statement holds regardless of whether a preliminary test for normality is applied to the data or not. A sample or a pair of samples is not normally distributed just because the result of the Shapiro-Wilk test suggests it. From a formal perspective, a sample is a set of fixed 'realizations'; it is not a random variable which could be said to follow some distribution. The preliminary test cannot alter this basic fact; it can only select samples which appear to be drawn from a normal distribution. If, however, the underlying population is exponential, the preliminary test selects samples that are not representative of the underlying population. Of course, the Type I error rates of hypothesis tests are strongly altered if they are based on unrepresentative samples. Similarly, if the underlying distribution is normal, the pretest will filter out samples that do not appear normal with probability α_pre. These latter samples are again not representative of the underlying population, so that the Type I error of the subsequent nonparametric test will be equally affected.

In general, the problem is that the distribution of the test statistic of the test of interest depends on the outcome of the pretest. More precisely, errors occurring at the preliminary stage change the distribution of the test statistic at the second stage [38]. As can be seen in Tables 1 and 2, the distortion of the Type I error observed for Strategy I and II is based on at least two different mechanisms. The first mechanism is related to the power of the Shapiro-Wilk test: For the exponential distribution, Strategy I considerably affects the t test, but Strategy II does so even more. As both tables show, distortion of the Type I error, if present, is most pronounced in large samples. In line with this result, Strategy II alters the conditional Type I error to a greater extent than Strategy I, probably because in Strategy II, the pretest is applied to the collapsed set of residuals, that is, the pretest is based on a sample twice the size of that used in Strategy I.

To illustrate the second mechanism, asymmetry, we consider the interesting special case of Strategy I applied to samples from the uniform distribution. In Strategy I, Mann-Whitney's U test was chosen if the pretest for normality failed in at least one sample. Large violations of the nominal significance level of Mann-Whitney's U test were observed for small samples and numerically low significance levels for the pretest (23.3% for α_pre = .005 and n = 10). At α_pre = .005 and n = 10, the Shapiro-Wilk test has low power, so that only samples with extreme properties will be identified. In general, however, samples from the uniform distribution do not have extreme properties, such that, in most cases, only one member of the sample pair will be sufficiently extreme to be detected by the Shapiro-Wilk test. Consequently, pairs of samples are selected by the preliminary test for which one member is extreme and the other member is representative; the main significance test will then indicate that the samples do indeed differ. For these pairs of samples, the Shapiro-Wilk test and the Mann–Whitney U test essentially yield the same result because they test similar hypotheses. In contrast, in Strategy II, the pretest selected pairs of samples for which the set of residuals (i.e., the two samples shifted over each other) appeared non-normal. This result generally corresponds to the standard situation in nonparametric statistics, so that the conditional Type I error rate of Mann-Whitney's U test applied to samples from the uniform distribution was unaffected by the asymmetry mechanism.

Type I error and power of the entire two-stage procedure

On the one hand, our study showed that conditional Type I error rates may heavily deviate from the nominal significance level (Tables 1 and 2). On the other hand, direct assessment of the unconditional Type I error rate (Table 3) and power (Table 4) of the two-stage procedure suggests that the two-stage procedure as a whole has acceptable statistical properties. What might be the reason for this discrepancy? To assess the consequences of preliminary tests for the entire two-stage procedure, the power of the pretest needs to be taken into account,

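$$P(\text{Type I error}) = P(\text{Type I error} \mid \text{Pretest n.s.})\,P(\text{Pretest n.s.}) + P(\text{Type I error} \mid \text{Pretest sig.})\,P(\text{Pretest sig.}) \tag{2}$$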

with P(Type I error | Pretest n.s.) denoting the conditional Type I error rate of the t test (Tables 1 and 2 left), P(Type I error | Pretest sig.) denoting the conditional Type I error rate of the U test (Tables 1 and 2 right), and P(Pretest sig.) and P(Pretest n.s.) denoting the power and 1 – power of the pretest for normality. In Strategy I, P(Pretest sig.) corresponds to the probability of rejecting normality for at least one of the two samples, whereas in Strategy II, it is the probability of rejecting the assumption of normality of the residuals from both samples.
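The weighting of the two conditional error rates by the pretest power can be written as a one-line function. This is a sketch of the decomposition described above, not code from the study; the function name is hypothetical, and the numbers in the example are illustrative placeholders, not values from Tables 1 to 3.

```python
def unconditional_type1_error(err_given_ns, err_given_sig, pretest_power):
    """Combine conditional Type I error rates by total probability.

    err_given_ns:  P(Type I error | Pretest n.s.), i.e. the t test arm
    err_given_sig: P(Type I error | Pretest sig.), i.e. the U test arm
    pretest_power: P(Pretest sig.)
    """
    p_ns = 1.0 - pretest_power
    return err_given_ns * p_ns + err_given_sig * pretest_power


# Illustrative placeholders: a badly inflated t test arm that is almost
# never reached because the pretest rejects normality 99.9% of the time.
overall = unconditional_type1_error(0.70, 0.05, pretest_power=0.999)
```

In this hypothetical setting the overall rate stays close to the U test arm's rate, because an arm that is rarely entered contributes little to the sum, however distorted its conditional error rate is.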

For the t test, unacceptable rates of false decisions due to selection effects of the preliminary Shapiro-Wilk test occur for large samples and numerically high significance levels α_pre (e.g., left column in Table 2). In these settings, however, the Shapiro-Wilk test detects deviations from normality with nearly 100% power, so that Student's t test is practically never used. Instead, the nonparametric test is used, which seems to protect the Type I error for those samples. This pattern of results holds for both Strategy I and Strategy II. Conversely, it was demonstrated above that Mann-Whitney's U test is biased for normally distributed data if the sample size is low and the preliminary significance level is strict (e.g., α_pre = .005, right columns of Tables 1 or 2). For samples from the normal distribution, however, deviation from normality is only rarely detected at α_pre = .005, so that the consequences for the overall Type I error of the entire two-stage procedure are again very limited.

A similar argument holds for statistical power: For a given alternative, the overall power of the two-stage procedure corresponds, by construction, to the weighted sum of the conditional power of the t test and the U test. When populations deviate only slightly from normality, the pretest for normality has low power, and the power of the two-stage procedure will tend towards the unconditional power of Student's t test; this fails to hold only in those rare cases in which the preliminary test indicates non-normality, so that the slightly less powerful Mann-Whitney U test is applied. When the populations deviate considerably from normality, the power of the Shapiro-Wilk test is high for both strategies, and the overall power of the two-stage procedure will tend towards the unconditional power of Mann-Whitney's U test.
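The unconditional behaviour of the whole procedure can be estimated directly by simulation. The sketch below is a minimal version under stated assumptions: Python with NumPy and SciPy, Strategy II (pretest on the pooled residuals), exponential populations, and illustrative values for the sample size, the shift, and the number of replications. None of these settings are claimed to match the study's simulation design.

```python
import numpy as np
from scipy import stats


def two_stage_pvalue(x, y, alpha_pre=0.05):
    """Strategy II: pretest the pooled residuals, then t test or U test."""
    residuals = np.concatenate([x - x.mean(), y - y.mean()])
    _, p_pre = stats.shapiro(residuals)
    if p_pre >= alpha_pre:
        # Pretest not significant: pooled-variance Student's t test.
        _, p_main = stats.ttest_ind(x, y)
    else:
        # Pretest significant: Mann-Whitney's U test.
        _, p_main = stats.mannwhitneyu(x, y, alternative="two-sided")
    return p_main


def rejection_rate(shift, n=20, alpha=0.05, n_sim=2000, seed=7):
    """Share of simulated trials in which the two-stage procedure rejects."""
    rng = np.random.default_rng(seed)
    rejections = 0
    for _ in range(n_sim):
        x = rng.exponential(size=n)
        y = rng.exponential(size=n) + shift  # shift = 0 is the null case
        if two_stage_pvalue(x, y) < alpha:
            rejections += 1
    return rejections / n_sim


type1 = rejection_rate(shift=0.0)  # unconditional Type I error estimate
power = rejection_rate(shift=1.0)  # unconditional power estimate
print(f"Type I error: {type1:.3f}, power: {power:.3f}")
```

Every simulated trial counts towards the estimate regardless of which branch was taken, which is what distinguishes the unconditional rates from the conditional ones.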

Finally, it should be emphasized that the conditional Type I error rates shown in Tables 1 and 2 correspond to the rather unlikely scenario in which researchers would continue sampling until the assumptions are met. In contrast, the unconditional Type I error and power of the two-stage procedure are most relevant because, in practice, researchers do not continue sampling until they obtain normality. Researchers who do not know in advance whether the underlying population distribution is normal usually base their decision on the samples obtained. If by chance a sample from a non-normal distribution happens to look normal, the researcher could falsely assume that the normality assumption holds. However, this chance is rather low because of the high power of the Shapiro-Wilk test, especially for larger sample sizes.

Conclusions

From a formal perspective, preliminary testing for normality is incorrect and should therefore be avoided. Normality has to be established for the populations under consideration; if this is not possible, "support for the assumption of normality must come from extra-data sources" ([30], p. 7). For example, when planning a study, assumptions may be based on the results of earlier trials [21] or pilot studies [36]. Although often limited in size, pilot studies could serve to identify substantial deviations from normality. From a practical perspective, however, preliminary testing does not seem to cause much harm, at least for the cases we have investigated. The worst that can be said is that preliminary testing is unnecessary: For large samples, the t test has been shown to be robust in many situations [51–55] (see also Tables 1 and 2 of the present paper), and for small samples, the Shapiro-Wilk test lacks power to detect deviations from normality. If the application of the t test is doubtful, the unconditional use of nonparametric tests seems to be the best choice [56].

References

1. Altman DG: Statistics in medical journals. Stat Med. 1982, 1: 59-71. 10.1002/sim.4780010109.

2. Altman DG: Statistics in medical journals: Developments in the 1980s. Stat Med. 1991, 10: 1897-1913. 10.1002/sim.4780101206.

3. Altman DG: Statistics in medical journals: Some recent trends. Stat Med. 2000, 19: 3275-3289. 10.1002/1097-0258(20001215)19:23<3275::AID-SIM626>3.0.CO;2-M.

4. Glantz SA: Biostatistics: How to detect, correct and prevent errors in medical literature. Circulation. 1980, 61: 1-7. 10.1161/01.CIR.61.1.1.

5. Pocock SJ, Hughes MD, Lee RJ: Statistical problems in the reporting of clinical trials—A survey of three medical journals. N Engl J Med. 1987, 317: 426-432. 10.1056/NEJM198708133170706.

6. Altman DG: Poor-quality medical research: What can journals do?. JAMA. 2002, 287: 2765-2767. 10.1001/jama.287.21.2765.

7. Strasak AM, Zaman Q, Marinell G, Pfeiffer KP, Ulmer H: The use of statistics in medical research: A comparison of The New England Journal of Medicine and Nature Medicine. Am Stat. 2007, 61: 47-55. 10.1198/000313007X170242.

8. Fernandes-Taylor S, Hyun JH, Reeder RN, Harris AHS: Common statistical and research design problems in manuscripts submitted to high-impact medical journals. BMC Res Notes. 2011, 4: 304. 10.1186/1756-0500-4-304.

9. Olsen CH: Review of the use of statistics in Infection and Immunity. Infect Immun. 2003, 71: 6689-6692. 10.1128/IAI.71.12.6689-6692.2003.

10. Neville JA, Lang W, Fleischer AB: Errors in the Archives of Dermatology and the Journal of the American Academy of Dermatology from January through December 2003. Arch Dermatol. 2006, 142: 737-740. 10.1001/archderm.142.6.737.

11. Altman DG: Practical Statistics for Medical Research. 1991, Chapman and Hall, London

12. Cressie N: Relaxing assumptions in the one sample t-test. Aust J Stat. 1980, 22: 143-153. 10.1111/j.1467-842X.1980.tb01161.x.

13. Ernst MD: Permutation methods: A basis for exact inference. Stat Sci. 2004, 19: 676-685. 10.1214/088342304000000396.

14. Wilcox RR: How many discoveries have been lost by ignoring modern statistical methods?. Am Psychol. 1998, 53: 300-314.

15. Micceri T: The unicorn, the normal curve, and other improbable creatures. Psychol Bull. 1989, 105: 156-166.

16. Kühnast C, Neuhäuser M: A note on the use of the non-parametric Wilcoxon-Mann-Whitney test in the analysis of medical studies. Ger Med Sci. 2008, 6: 2-5.

17. New England Journal of Medicine: Guidelines for manuscript submission. (Retrieved from http://www.nejm.org/page/author-center/manuscript-submission); 2011

18. Altman DG, Gore SM, Gardner MJ, Pocock SJ: Statistics guidelines for contributors to medical journals. Br Med J. 1983, 286: 1489-1493. 10.1136/bmj.286.6376.1489.

19. Moher D, Schulz KF, Altman DG for the CONSORT Group: The CONSORT statement: Revised recommendations for improving the quality of reports of parallel-group randomized trials. Ann Intern Med. 2001, 134: 657-662.

20. Vickers AJ: Parametric versus non-parametric statistics in the analysis of randomized trials with non-normally distributed data. BMC Med Res Meth. 2005, 5: 35. 10.1186/1471-2288-5-35.

21. ICH E9: Statistical principles for clinical trials. 1998, International Conference on Harmonisation, London, UK

22. Gebski VJ, Keech AC: Statistical methods in clinical trials. Med J Aust. 2003, 178: 182-184.

23. Livingston EH: Who was Student and why do we care so much about his t-test?. J Surg Res. 2004, 118: 58-65. 10.1016/j.jss.2004.02.003.

24. Shuster J: Diagnostics for assumptions in moderate to large simple trials: do they really help?. Stat Med. 2005, 24: 2431-2438. 10.1002/sim.2175.

25. Meredith WM, Frederiksen CH, McLaughlin DH: Statistics and data analysis. Annu Rev Psychol. 1974, 25: 453-505. 10.1146/annurev.ps.25.020174.002321.

26. Bancroft TA: On biases in estimation due to the use of preliminary tests of significance. Ann Math Statist. 1944, 15: 190-204. 10.1214/aoms/1177731284.

27. Paull AE: On a preliminary test for pooling mean squares in the analysis of variance. Ann Math Statist. 1950, 21: 539-556. 10.1214/aoms/1177729750.

28. Gurland J, McCullough RS: Testing equality of means after a preliminary test of equality of variances. Biometrika. 1962, 49: 403-417.

29. Freidlin B, Miao W, Gastwirth JL: On the use of the Shapiro-Wilk test in two-stage adaptive inference for paired data from moderate to very heavy tailed distributions. Biom J. 2003, 45: 887-900. 10.1002/bimj.200390056.

30. Easterling RG, Anderson HE: The effect of preliminary normality goodness of fit tests on subsequent inference. J Stat Comput Simul. 1978, 8: 1-11. 10.1080/00949657808810243.

31. Pappas PA, DePuy V: An overview of non-parametric tests in SAS: When, why and how. Proceeding of the SouthEast SAS Users Group Conference (SESUG 2004): Paper TU04. 2004, Miami, FL, SouthEast SAS Users Group, 1-5.

32. Bogaty P, Dumont S, O'Hara G, Boyer L, Auclair L, Jobin J, Boudreault J: Randomized trial of a noninvasive strategy to reduce hospital stay for patients with low-risk myocardial infarction. J Am Coll Cardiol. 2001, 37: 1289-1296. 10.1016/S0735-1097(01)01131-7.

33. Holman AJ, Myers RR: A randomized, double-blind, placebo-controlled trial of pramipexole, a dopamine agonist, in patients with fibromyalgia receiving concomitant medications. Arthritis Rheum. 2005, 53: 2495-2505.

34. Lawson ML, Kirk S, Mitchell T, Chen MK, Loux TJ, Daniels SR, Harmon CM, Clements RH, Garcia VF, Inge TH: One-year outcomes of Roux-en-Y gastric bypass for morbidly obese adolescents: a multicenter study from the Pediatric Bariatric Study Group. J Pediatr Surg. 2006, 41: 137-143. 10.1016/j.jpedsurg.2005.10.017.

35. Norager CB, Jensen MB, Madsen MR, Qvist N, Laurberg S: Effect of darbepoetin alfa on physical function in patients undergoing surgery for colorectal cancer. Oncology. 2006, 71: 212-220. 10.1159/000106071.

36. Shuster J: Student t-tests for potentially abnormal data. Stat Med. 2009, 28: 2170-2184. 10.1002/sim.3581.

37. Schoder V, Himmelmann A, Wilhelm KP: Preliminary testing for normality: Some statistical aspects of a common concept. Clin Exp Dermatol. 2006, 31: 757-761. 10.1111/j.1365-2230.2006.02206.x.

38. Wells CS, Hintze JM: Dealing with assumptions underlying statistical tests. Psychol Sch. 2007, 44: 495-502. 10.1002/pits.20241.

39. Rasch D, Kubinger KD, Moder K: The two-sample t test: pretesting its assumptions does not pay. Stat Papers. 2011, 52: 219-231. 10.1007/s00362-009-0224-x.

40. Zimmerman DW: A simple and effective decision rule for choosing a significance test to protect against non-normality. Br J Math Stat Psychol. 2011, 64: 388-409. 10.1348/000711010X524739.

41. Schucany WR, Ng HKT: Preliminary goodness-of-fit tests for normality do not validate the one-sample Student t. Commun Stat Theory Methods. 2006, 35: 2275-2286. 10.1080/03610920600853308.

42. Zimmerman DW: Some properties on preliminary tests of equality of variances in the two-sample location problem. J Gen Psychol. 1996, 123: 217-231. 10.1080/00221309.1996.9921274.

43. Zimmerman DW: Invalidation of parametric and nonparametric statistical tests by concurrent violation of two assumptions. J Exp Educ. 1998, 67: 55-68. 10.1080/00220979809598344.

44. Zimmerman DW: Conditional probabilities of rejecting H0 by pooled and separate-variances t tests given heterogeneity of sample variances. Commun Stat Simul Comput. 2004, 33: 69-81. 10.1081/SAC-120028434.

45. Zimmerman DW: A note on preliminary tests of equality of variances. Br J Math Stat Psychol. 2004, 57: 173-181. 10.1348/000711004849222.

46. R Development Core Team: R: A language and environment for statistical computing. 2011, R Foundation for Statistical Computing, Vienna, Austria

47. Lee AFS: Student's t statistics. Encyclopedia of Biostatistics. Edited by: Armitage P, Colton T. 2005, Wiley, New York, 2

48. Rosner B: Fundamentals of Biostatistics. 1990, PWS-Kent, Boston, 3

49. ICH E3: Structure and content of clinical study reports. 1995, International Conference on Harmonisation, London, UK

50. Rochon J, Kieser M: A closer look at the effect of preliminary goodness-of-fit testing for normality for the one-sample t-test. Br J Math Stat Psychol. 2011, 64: 410-426. 10.1348/2044-8317.002003.

51. Armitage P, Berry G, Matthews JNS: Statistical Methods in Medical Research. 2002, Blackwell, Malden, MA

52. Boneau CA: The effects of violations underlying the t test. Psychol Bull. 1960, 57: 49-64.

53. Box GEP: Non-normality and tests of variances. Biometrika. 1953, 40: 318-335.

54. Rasch D, Guiard V: The robustness of parametric statistical methods. Psychology Science. 2004, 46: 175-208.

55. Sullivan LM, D'Agostino RB: Robustness of the t test applied to data distorted from normality by floor effects. J Dent Res. 1992, 71: 1938-1943. 10.1177/00220345920710121601.

56. Akritas MG, Arnold SF, Brunner E: Nonparametric hypotheses and rank statistics for unbalanced factorial designs. J Am Stat Assoc. 1997, 92: 258-265. 10.1080/01621459.1997.10473623.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1471-2288/12/81/prepub

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

All authors jointly designed the study. JR carried out the simulations; MG assisted in the simulations and the creation of the figures. JR and MG drafted the manuscript. MK planned the study and finalized the manuscript. All authors read and approved the final manuscript.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Rochon, J., Gondan, M. & Kieser, M. To test or not to test: Preliminary assessment of normality when comparing two independent samples. BMC Med Res Methodol 12, 81 (2012). https://doi.org/10.1186/1471-2288-12-81

DOI : https://doi.org/10.1186/1471-2288-12-81

Keywords

- Testing for normality

- Student's t test

- Mann-Whitney's U test

Source: https://bmcmedresmethodol.biomedcentral.com/articles/10.1186/1471-2288-12-81
